Machination minutiae hadn't necessarily struck us as something of interest, but ask and you shall receive, dear readers. Today, we're taking GerbilMagnus' lead and taking readers behind the scenes of our Wi-Fi testing process—we'll also toss in a little theory and practice along the way. If you want to try our methods at home, know up front that you don't necessarily have to replicate our entire test setup to start seeing useful results for yourself. But if you want to put the latest and greatest mesh gear through the gauntlet, we'll absolutely cover everything from top to bottom before we're done.

Why we run complex tests

Most professional Wi-Fi tests are nothing more than simple Internet speed tests—set up a router or mesh kit, plop a laptop down 10 feet away, and let 'er rip. The idea here is that the highest top speed at close range will also translate into the best performance everywhere else.

Unfortunately, things don't generally work that way. All you've really measured is how fast one single download from one single device at optimal range and with no obstructions can go—which is usually not the thing that's frustrating real-world users. When you're mad at your Wi-Fi, it's rarely because a download isn't going fast enough—far more frequently, the problem is it's acting "flaky," and clicking a link results in a blank browser screen for long enough that you wonder if you should hit refresh, or close the browser and try again, or what.

The driving force behind our Wi-Fi reviews is accurately gauging real-world user experience. And if you accept our premise—that slow response to clicks is the most frequent pain point—that means you don't want to measure speed. You want to measure latency—specifically, application latency, not network latency. (Application latency means the amount of time it takes for your application to do what you've asked it to, and it is a function of both network latency and throughput.)

The most challenging workload we throw at our Wi-Fi is usually just what we said in the beginning: Web browsing. When you click a link to view "a webpage," what you're really downloading isn't a single page—it's typically a set of tens if not hundreds of individual resources, of varying sizes and usually spread over multiple domains. You need most, if not all, of those resources to finish downloading before your browser can render the page visually.

If one or more of those elements takes a long time to download—or stalls out and doesn't download—you're left staring at a blank or only partially rendered webpage wondering what's going on. So, in order to get a realistic idea of user experience, our test workload also needs to depend on multiple elements, with its application latency defined as how long it takes the last one of those elements to arrive.

Streaming video is another essential component of the typical user's Wi-Fi demands. Users want to stream Netflix, Hulu, YouTube, and more to set-top boxes like Roku or Amazon Fire Sticks, smart TVs, phones, tablets, and laptops. For the most part, the streaming workload isn't difficult in and of itself—streaming services keep deep buffers to smooth out irregularities and compensate for periods of low throughput. However, streaming puts a constant load on the rest of the network—which quickly leads to that "stalled webpage" pain point we just talked about.

Wi-Fi latency is about the worst pings, not the best

If you set up a simple, cheap Wi-Fi router in an empty house with a big yard and ping it from a laptop, you won't see terrible latency times. If the laptop's in a really good position, you might get pings as low as 2ms (milliseconds). From farther away in the house, on the other side of walls, you may start seeing latency creep up as high as 50ms.

This is already enough to start making a gamer's eyelid twitch, but in terms of browsing websites, it doesn't sound so bad—after all, the median human reaction time is more than 250ms. The problem is that a connection showing pings "as low as" 2ms will very likely have one or two out of every 10 that are considerably higher—for a laptop in ideal conditions, maybe the highest of 10 pings is 35ms. For one on the other side of the house, it might be 90ms or worse.

The ping we care about here is the worst ping out of 10 or more, not the lowest or even the median. Since fetching webpages means simultaneously asking for tens or hundreds of resources, and we bind on the slowest one to return, it doesn't matter how quick nine of them are if the tenth is slow as molasses—that bad ping is the one holding us up.
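To make the "worst ping wins" point concrete, here is a small sketch. A parallel page fetch finishes only when its slowest resource arrives, so page latency is the maximum of the individual latencies, not their mean or median. The sample latencies are made-up illustrative numbers, not measurements. The `p_any_slow` helper assumes each request is independently slow with the same probability, which is a simplification.

```python
from statistics import mean, median

def page_latency_ms(resource_latencies_ms):
    """A parallel fetch is done only when its slowest resource arrives."""
    return max(resource_latencies_ms)

def p_any_slow(p_slow, n_resources):
    """Chance that at least one of n independent requests hits a slow ping."""
    return 1 - (1 - p_slow) ** n_resources

# Hypothetical latencies (ms) for ten parallel requests; illustrative, not measured.
samples = [2, 3, 2, 4, 3, 2, 5, 3, 2, 35]

print("min:", min(samples))
print("median:", median(samples))
print("mean:", mean(samples))
print("page done after:", page_latency_ms(samples))

# If 1 ping in 10 is slow, a 16-resource page hits at least one slow ping most of the time.
print(round(p_any_slow(0.1, 16), 3))
```

The median and mean look comfortable here. The number the user actually feels is the maximum.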

But so far, we're still just talking about a single device talking to a Wi-Fi access point in an empty house, with no competition. And that's not very realistic, either. In the real world, there are likely to be dozens of devices on the same network—and there may be dozens more within "earshot" on the same channel, in neighbors' houses or apartments. And any time one of them speaks, all the rest of them have to shut up and wait their turn.

It’s all about airtime

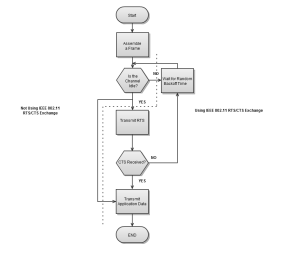

If a Wi-Fi device can "hear" an active signal on its channel—any signal, Wi-Fi or not, intelligible or not—at -62dBm or higher, it has to shut up and wait for that transmission to stop. It also has to shut up and wait if it can hear any valid Wi-Fi transmission on its channel at -82dBm or higher. This listen-before-talk check is called Clear Channel Assessment (CCA), and the -82dBm threshold is sensitive enough that devices in a neighbor's house or apartment can easily trigger it. (Yes, even devices on a different network with a different password have to respect each other's airtime.)
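The two CCA thresholds above can be expressed as a simple decision function. This is a simplified model for illustration only. It uses the -62dBm "any signal" and -82dBm "valid Wi-Fi" thresholds from the paragraph above and ignores other parts of 802.11 medium access, such as virtual carrier sense (the NAV).

```python
ENERGY_DETECT_DBM = -62.0    # any signal on the channel, Wi-Fi or not
PREAMBLE_DETECT_DBM = -82.0  # a decodable Wi-Fi transmission

def channel_busy(rssi_dbm: float, is_valid_wifi: bool) -> bool:
    """Return True if this simplified CCA model says we must wait."""
    if rssi_dbm >= ENERGY_DETECT_DBM:
        return True  # loud enough that it doesn't matter what it is
    # Quieter signals only count if they're recognizable Wi-Fi.
    return is_valid_wifi and rssi_dbm >= PREAMBLE_DETECT_DBM

# A neighbor's Wi-Fi at -75dBm forces us to wait; non-Wi-Fi noise at -75dBm does not.
print(channel_busy(-75, True))
print(channel_busy(-75, False))
print(channel_busy(-60, False))
```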

Although CCA helps keep collisions (two devices transmitting over each other at the same time) from happening as often as they otherwise might, they do still happen. Since there's no out-of-band control channel, two devices whose packets collided have no choice but to stop, wait a random amount of time, re-assess CCA, and try again if the channel is clear. If they both picked the same random interval, they'll collide again and have to try again. If another device took advantage of the "quiet" while they were waiting and began transmitting, both devices have to wait for it.
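Here is a rough sketch of the "wait a random amount of time" step. It assumes exactly two contending stations that each pick a backoff slot uniformly from 0 to `cw`. When both pick the same slot, they collide again. Real 802.11 backoff also doubles the contention window after each collision, and the window sizes below are meant only to mirror that doubling pattern. The simulation is a sanity check on the closed-form `1 / (cw + 1)`, not a model of any particular chipset.

```python
import random

def collision_probability(cw: int) -> float:
    """Chance that two stations pick the same slot from 0..cw inclusive."""
    return 1 / (cw + 1)

def simulate(cw: int, trials: int, seed: int = 1) -> float:
    """Monte Carlo estimate of the same probability."""
    rng = random.Random(seed)
    hits = sum(rng.randint(0, cw) == rng.randint(0, cw) for _ in range(trials))
    return hits / trials

# Wider windows mean fewer repeat collisions, at the cost of longer average waits.
for cw in (15, 31, 63):
    print(cw, round(collision_probability(cw), 4), round(simulate(cw, 100_000), 4))
```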

It gets worse when we talk about people trying to use Wi-Fi from "the bad room in the house." A device trying to connect from a long distance or over heavy obstructions isn't only slow itself; it has a disproportionate impact on the entire network. Remember, everything has to shut up while any device is talking—so if you've neglected somebody's bedroom, it's going to hurt the whole house.

Most Wi-Fi devices have 2x2 radios in them—which you may see referred to as AC1200, AC1300, or similar, depending largely on how froggy the marketing department's gotten lately. At close range, that radio will typically connect at a PHY rate of 300Mbps on 2.4GHz (40MHz channel width, 64-QAM) or 867Mbps on 5GHz (80MHz channel width, 256-QAM). This PHY rate is the "speed" you frequently see in a Windows connection dialog after joining a network. The PHY rate is not the actual data transmission rate, though. Realistically, a user will typically see around 1/2 to 2/3 of that rate for actual data transmission.
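The headline PHY rates come from simple arithmetic: the data subcarriers in the channel, times the bits per subcarrier set by the modulation, times the coding rate, times the number of spatial streams, divided by the OFDM symbol time. This sketch assumes the 400ns short guard interval, which gives 3.6µs symbols, and the top coding rate of 5/6. It then applies the article's 1/2 to 2/3 rule of thumb for usable throughput.

```python
def phy_rate_mbps(data_subcarriers, bits_per_symbol, coding_rate, streams,
                  symbol_us=3.6):
    """Data bits per OFDM symbol across all streams, divided by symbol time (µs)."""
    bits_per_ofdm_symbol = data_subcarriers * bits_per_symbol * coding_rate * streams
    return bits_per_ofdm_symbol / symbol_us  # bits per microsecond == Mbps

# 2.4GHz, 40MHz (802.11n): 108 data subcarriers, 64-QAM (6 bits), rate 5/6, 2 streams
n40 = phy_rate_mbps(108, 6, 5 / 6, 2)
# 5GHz, 80MHz (802.11ac): 234 data subcarriers, 256-QAM (8 bits), rate 5/6, 2 streams
ac80 = phy_rate_mbps(234, 8, 5 / 6, 2)

for name, rate in (("2.4GHz/40MHz", n40), ("5GHz/80MHz", ac80)):
    # Rule of thumb from the text: real data throughput is ~1/2 to 2/3 of PHY.
    print(f"{name}: PHY {rate:.0f}Mbps, usable ~{rate / 2:.0f}-{rate * 2 / 3:.0f}Mbps")
```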

So, a typical device with great placement, on a quiet network, can actually move somewhere between 100Mbps and 400Mbps of data, give or take. A 1080p YouTube video requires around 5Mbps of traffic—so each second of YouTube playback consumes somewhere between 1.25 and 5 percent of your airtime. Extrapolating from that, the worst-case network latency imposed by having to work around that traffic is on the order of 12.5ms-50ms. Not bad!

On the other hand... let's examine that "bad room" again. Maybe it's 50 feet and several interior walls away from the router, and a laptop in there can only connect on 2.4GHz with BPSK modulation, at a PHY rate of 30Mbps. It's not a great connection even at that low modulation, and we're only getting about 1/4 of the PHY rate (roughly 7.5Mbps) for actual data throughput, so our 5Mbps YouTube stream occupies about two-thirds of the available airtime. This user's YouTube playback is going to be slow to start while it buffers, it's likely to drop resolution, and it'll frequently pause to refill buffers during playback. Just as importantly, it's not only eating two-thirds of its own airtime—it's eating two-thirds of everybody's airtime.
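The airtime arithmetic in the last two paragraphs fits in a few lines. This sketch takes the article's round numbers as inputs: a 5Mbps stream, 100 to 400Mbps usable for a well-placed device, and a 30Mbps PHY at about 1/4 efficiency for the bad room. It reports the share of each second the channel spends carrying that one stream. With these exact inputs, the bad room comes out at about two-thirds of all airtime.

```python
STREAM_MBPS = 5.0  # 1080p YouTube stream, per the article

def airtime_share(stream_mbps, effective_mbps):
    """Fraction of each second the channel spends carrying this stream."""
    return stream_mbps / effective_mbps

cases = {
    "great placement, low end": 100.0,
    "great placement, high end": 400.0,
    "bad room: 30Mbps PHY x ~1/4": 30.0 / 4,
}

for name, effective in cases.items():
    share = airtime_share(STREAM_MBPS, effective)
    # Busy airtime per second of playback, which the text uses as a rough
    # bound on the extra latency other devices may have to wait out.
    print(f"{name}: {share:.1%} of airtime, ~{share * 1000:.1f}ms of every second")
```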

When you have poor device placement and active data use, it's not uncommon to see pings go unanswered for several hundred milliseconds—or to see pings even get dropped entirely.

Wi-Fi mesh can make it better

This Eero kit consists of three Eero routers, rather than the less-expensive one-router-and-two-Beacon kits. Credit: Jim Salter

A quartet of tri-band Plume Superpods faced off against a pair of dual-band Nest Wi-Fi devices earlier this year. It didn't go well for Nest. Credit: Jim Salter

Plume's Superpod design is shown here next to an Orbi RBS-50 satellite, an Eero router, and an Eero Beacon. The Superpod is roughly the size of the Orbi's power brick alone.

There's a lot of common "wisdom" out there stating that Wi-Fi mesh makes things worse, since it needs to retransmit data. Now, instead of your laptop connecting directly to the router and transmitting, the signal path goes from laptop to satellite, and satellite to router—double the transmission, double the airtime! Terrible, right? Well, not typically.

Remember how we talked about different modulation standards and rates, like BPSK (at the worst low end) and 256-QAM at the high end? Better placement means negotiating higher PHY rates, with fewer transmission errors. If we put a mesh kit in the same house with that laptop in "the bad room", perhaps its satellite can be placed halfway between the router and the bad room. Now, the laptop connects to the satellite on 5GHz 64-QAM, with a decently low error rate, and the satellite is connected to the router at 5GHz on 64-QAM also. Each end manages to get 1/2 of the PHY rate, meaning a bit less than 300Mbps.

We'll have to cut the total rate down to 40 percent—since retransmission on the same band isn't completely efficient—but that still leaves us with an effective data throughput rate of 120Mbps, and our 5Mbps YouTube stream now occupies only about 4.2 percent of our available airtime. That leads to a greatly improved experience not only in the "bad room," but in the entire house whenever somebody in the "bad room" tries to actually use the Wi-Fi.
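The same airtime math can compare the direct bad-room link with the mesh path. This sketch uses the article's figures as inputs. The direct link is a 30Mbps PHY at about 1/4 efficiency. The mesh path is about 300Mbps per hop, scaled to 40 percent overall to account for same-band retransmission. The efficiency factors are the article's rough estimates, not measured constants.

```python
STREAM_MBPS = 5.0

def effective_mbps(phy_or_hop_mbps, efficiency):
    """Usable throughput after applying a rough efficiency factor."""
    return phy_or_hop_mbps * efficiency

# Direct link from the bad room: 30Mbps PHY at ~1/4 efficiency.
direct = effective_mbps(30.0, 0.25)
# Mesh: ~300Mbps per hop, cut to ~40% because both hops share one 5GHz channel.
mesh = effective_mbps(300.0, 0.40)

print(f"direct: {direct:.1f}Mbps, stream uses {STREAM_MBPS / direct:.1%} of airtime")
print(f"mesh:   {mesh:.1f}Mbps, stream uses {STREAM_MBPS / mesh:.1%} of airtime")
```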

This still isn't the best way to use our airtime. Although a 2.4GHz, 40MHz-wide channel will only offer a 300Mbps PHY between laptop and satellite, if our remote laptop connects to the satellite on that 2.4GHz channel, and the satellite uses the 5GHz, 80MHz-wide channel for backhaul, they can transmit simultaneously—CCA won't apply, since fronthaul and backhaul are on different channels. This means our 5Mbps of YouTube traffic still occupies about three or four percent of the laptop's airtime, but it's only consuming half that much of the 5GHz airtime. It's also reducing latency, because the laptop doesn't have to shut up and wait for the satellite's backhaul, or vice versa.
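When fronthaul and backhaul sit on different bands, each hop only spends airtime on its own channel. This sketch assumes about 150Mbps usable on the 2.4GHz fronthaul (half of a 300Mbps PHY) and about 300Mbps on the 5GHz backhaul (the "bit less than 300Mbps" hop from the mesh example). Both figures are rough assumptions built from the article's rules of thumb.

```python
STREAM_MBPS = 5.0

def hop_airtime(stream_mbps, effective_mbps):
    """Share of one channel's airtime used to carry the stream over one hop."""
    return stream_mbps / effective_mbps

# Assumed usable rates for each hop.
fronthaul_24 = hop_airtime(STREAM_MBPS, 300.0 / 2)  # laptop <-> satellite, 2.4GHz
backhaul_5 = hop_airtime(STREAM_MBPS, 300.0)        # satellite <-> router, 5GHz

# Different channels: each hop only consumes airtime on its own channel, and
# CCA on one channel doesn't make the other hop wait.
airtime_by_channel = {"2.4GHz": fronthaul_24, "5GHz": backhaul_5}
for channel, share in airtime_by_channel.items():
    print(f"{channel}: {share:.1%}")
```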

In theory, Wi-Fi extenders should also bring the same kind of improvements that mesh kits do. Unfortunately, in practice, Wi-Fi extenders are almost universally terrible. The very best results we've seen from Wi-Fi extenders boil down to "they didn't actually hurt anything," with more frequent results being significant slowdowns for the entire network with the extender in place.

Really good mesh kits also find ways to split multiple devices up among multiple channels, reducing the congestion between them—a laptop on 5GHz channel 42 won't congest with another on 5GHz channel 155, and neither one congests with another device on 2.4GHz channel 1. Similarly, a good mesh kit will try to avoid having both fronthaul and backhaul on the same channel.
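A quick way to see why those channels don't congest each other is to compare their occupied spectrum. This sketch uses the standard channel-number-to-center-frequency mapping (2407 + 5 × channel MHz for 2.4GHz channels 1-13, 5000 + 5 × channel MHz for 5GHz). In this mapping, an 80MHz 5GHz channel number such as 42 names the center of its block. The sketch treats widths as nominal and ignores spectral-mask bleed, so it is an idealization.

```python
def center_mhz(channel: int) -> int:
    """Center frequency for a channel number (2.4GHz ch 1-13, else 5GHz)."""
    if 1 <= channel <= 13:
        return 2407 + 5 * channel
    return 5000 + 5 * channel

def overlaps(ch_a: int, width_a: int, ch_b: int, width_b: int) -> bool:
    """True if two channels' nominal occupied spectrum overlaps."""
    gap = abs(center_mhz(ch_a) - center_mhz(ch_b))
    return gap < (width_a + width_b) / 2

print(overlaps(42, 80, 155, 80))  # the two 80MHz channels from the text
print(overlaps(1, 20, 42, 80))    # 2.4GHz vs. 5GHz
print(overlaps(36, 20, 42, 80))   # channel 36 sits inside the channel-42 block
```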

Tri-band mesh kits like Orbi RBK-50 or Plume Superpods have a big advantage in efficient spectrum use, since they can fronthaul to devices on one 5GHz channel and backhaul to the next AP on the other, relegating 2.4GHz to legacy devices only.

Our testing tools

Now that we understand all that pesky theory and practice—how can we test a Wi-Fi network in ways that will expose the real quality of the user experience? First, we need to model realistic workloads where the pain is felt. In years of testing, what we've seen is that Web browsing—both real and emulated—is always the workload that fails first, so it's our canary.

We also want a realistic way to put stress on the network by consuming airtime and forcing those pesky, latency-sensitive Web requests to compete. In the real world, video streaming is the most common workload to accomplish that—so we want to model both video streaming and Web browsing in realistic ways. And since most people have multiple devices on the network, we want to test that way as well—not just a single laptop, but four of them, all having to compete for airtime.

There wasn't a good, inexpensive, widely available tool for doing this kind of broad-spectrum emulation when I began testing Wi-Fi—so I wrote my own modest suite of tools in Perl. These tools are now freely available for download under the GPLv3 license at my network-testing GitHub repository.

Linux users will have a big head start on Windows users when it comes to using netburn, the primary tool in the suite. It should "just work" as downloaded with no further dependencies on modern Linux distributions, and issuing a netburn command with no arguments gives you usage information. Windows users have a bit more work ahead of them; the Windows Subsystem for Linux is sufficiently performant to run netburn, but a couple of minor features—such as returning channel and spectrum information from the Wi-Fi interface—are non-functional there.

You'll need another machine running a simple instance of Apache or Nginx as a back-end server for netburn to query. When we test, our HTTP server lives on the WAN side of the router or mesh kit we're testing—so a gigabit LAN is our "Internet connection." This keeps any flakiness in our actual Internet connection from potentially contaminating our results. Our own test server is an Intel NUC with an i7 processor; it serves as both upstream router (separating our test kit from our "real" LAN) and HTTP server—but you don't necessarily need something that complex, especially if you only care about testing the Wi-Fi and aren't worried so much about routing performance.

The HTTP server should have files of known sizes available. We typically test with 128K and 1MB files, located at https://ift.tt/39K1qI9 and https://ift.tt/36Gm61S. What netburn actually does is issue HTTP requests to our back-end server, either singly or in parallel, and it sleeps in between fetches for long enough to limit the overall rate as requested.

Over a 1Gbps wired Ethernet link, our "Web browsing" workload looks like this:

```
me@banshee:~$ netburn -u http://testserver.lan/128K.bin -c 16 -r 1
Fetching http://192.168.100.11/128K.bin at maximum rate 1 Mbps (with concurrency=16), for 30 seconds.
Current throughput: 1 Mbps, Current mean latency: 21.13 ms, Current HTTP 200: 100% OK
Current throughput: 1 Mbps, Current mean latency: 21.77 ms, Current HTTP 200: 100% OK
Seconds remaining: -2
Time elapsed: 32 seconds
Number of fetch operations: 2
Concurrent clients per fetch operation: 16
Total data fetched: 4 MB
Mean fetch length (total, all 16 clients): 2048 KB
Percent requests returned HTTP 200 OK: 100%
Fetch length maximum deviation: 0 KB
Throughput achieved: 1 Mbps
Mean latency: 21.45 ms
Worst latency: 21.77 ms
99th percentile latency: 21.8 ms
95th percentile latency: 21.8 ms
90th percentile latency: 21.8 ms
75th percentile latency: 21.8 ms
Median latency: 21.8 ms
Min latency: 21.13 ms
```
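The summary block at the end of a netburn run reports min, median, upper percentiles, worst and mean latency across fetch operations. Here is a sketch of how such a summary could be computed from per-fetch latencies. It uses the nearest-rank percentile method, which is an assumption: netburn's own rounding or interpolation may differ. For example, its report for two fetches prints a 21.8 ms median, which is not what nearest-rank would give. The input latencies are hypothetical.

```python
def percentile(sorted_vals, pct):
    """Nearest-rank percentile (pct is an integer 1-100) of a sorted list."""
    n = len(sorted_vals)
    rank = max(1, -(-pct * n // 100))  # integer ceiling of pct * n / 100
    return sorted_vals[rank - 1]

def summarize(latencies_ms):
    """Summary statistics for a list of per-fetch latencies in ms."""
    vals = sorted(latencies_ms)
    report = {"Min latency": vals[0], "Median latency": percentile(vals, 50)}
    for pct in (75, 90, 95, 99):
        report[f"{pct}th percentile latency"] = percentile(vals, pct)
    report["Worst latency"] = vals[-1]
    report["Mean latency"] = sum(vals) / len(vals)
    return report

# Hypothetical per-page latencies (ms) from a short run.
for key, value in summarize([40, 10, 30, 20]).items():
    print(f"{key}: {value} ms")
```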

Each of our "webpages" consists of 16 128K resources, fetched in parallel. This adds up to 2MB—16 megabits—so our desired rate of 1Mbps means that we sleep in between fetches for long enough that we only fetch one "page" each 16 seconds. This is a pretty reasonable facsimile of a real human browsing real webpages. In production, we add some extra arguments to netburn—like --jitter 500 to introduce 500ms of jitter to avoid either helpful or harmful patterns where Web requests line up with streaming block fetches, -t 300 to make it run for 5 minutes instead of thirty seconds, and so forth—but this is the basic workload.
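Here is a sketch of the rate-limiting arithmetic in the paragraph above. A "page" of 16 × 128K at 1Mbps has to be spaced 16 seconds apart. The unit convention is an assumption: K and M are treated as powers of 1024, which is what makes 16 × 128K come out to exactly 2MB. The jitter model is also hypothetical. It adds a random 0 to `jitter_ms` offset to each sleep, and this is not necessarily how netburn's `--jitter` option behaves.

```python
import random

UNIT = 1024  # assumption: K and M as powers of 1024, so 16 x 128K = 2MB

def page_interval_s(resource_kb, concurrency, rate_mbps):
    """Seconds between page fetches so the average rate stays at rate_mbps."""
    page_bits = resource_kb * UNIT * 8 * concurrency
    return page_bits / (rate_mbps * UNIT * UNIT)

def sleep_before_next(interval_s, fetch_elapsed_s, jitter_ms, rng):
    """Hypothetical jitter: sleep out the interval, plus a random 0..jitter_ms."""
    offset = rng.uniform(0, jitter_ms / 1000)
    return max(0.0, interval_s - fetch_elapsed_s) + offset

interval = page_interval_s(128, 16, 1)  # 16 x 128K at 1Mbps
rng = random.Random(42)
print(interval)  # 16.0 seconds between "pages"
print(sleep_before_next(interval, 0.5, 500, rng))
```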

When testing Wi-Fi, both throughput and network latency will significantly affect application latency. At 100Mbps throughput—which is better single-node throughput than we typically see on a stressed all-devices Wi-Fi network—you're already looking at 180ms or so just to move one 2MB "page," with any transmission errors, CCA wait times, or packet collisions just ramping things up further.
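The "180ms or so" figure follows from simple transfer-time arithmetic. This sketch assumes a 2MB page (16 × 128 × 1024 bytes) and treats link throughput in decimal megabits per second. Pure transfer time alone comes to roughly 168ms. Adding even a few milliseconds of network latency puts the total in the neighborhood the text describes. It is a lower bound, since retries, CCA waits and collisions only add to it.

```python
def transfer_ms(total_bytes, throughput_mbps):
    """Time to move total_bytes at a given throughput (decimal Mbps), in ms."""
    return total_bytes * 8 / (throughput_mbps * 1_000_000) * 1000

def app_latency_ms(total_bytes, throughput_mbps, network_latency_ms):
    """Rough floor on application latency: wait for the network, then move bytes."""
    return network_latency_ms + transfer_ms(total_bytes, throughput_mbps)

page_bytes = 16 * 128 * 1024  # one simulated "webpage": 16 x 128K
print(round(transfer_ms(page_bytes, 100), 1))         # pure transfer time
print(round(app_latency_ms(page_bytes, 100, 10), 1))  # plus a hypothetical 10ms
```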

For our "streaming" workload, we fetch 1MB chunks instead of 128K. There's no concurrency for the streaming workload; we just rate-limit it to 5Mbps for 1080p, or 20Mbps for 4K. This matches the actual bandwidth used by streaming services at those resolutions.
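For the streaming workload, the same rate-limiting idea sets how often a 1MB chunk is requested. This sketch assumes 1MB = 8 megabits, using the same M for bytes and bits per second. It computes the chunk spacing for the 1080p and 4K profiles, and how many chunks fit in a hypothetical 300-second run (the `-t 300` duration mentioned earlier). The chunk count uses integer math to avoid floating-point rounding surprises.

```python
CHUNK_MBIT = 8                          # one 1MB chunk = 8 megabits
PROFILES_MBPS = {"1080p": 5, "4K": 20}  # rates from the text

def chunk_interval_s(rate_mbps):
    """Seconds between 1MB chunk requests at a given stream rate."""
    return CHUNK_MBIT / rate_mbps

def chunks_in_run(rate_mbps, duration_s):
    """Whole chunks fetched in a run: floor(duration * rate / chunk size)."""
    return duration_s * rate_mbps // CHUNK_MBIT

for name, rate in PROFILES_MBPS.items():
    print(name, chunk_interval_s(rate), chunks_in_run(rate, 300))
```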

We use another tool from the network-testing repository called net-hydra to schedule simultaneous netburn runs across all four Chromebooks, and we use a little bash scripting to automate multiple tests in succession and make a really loud "bing" noise when the whole thing's done.

The test environment

The top floor of our test house is relatively straightforward—although like many houses, it suffers from terrible router placement nowhere near its center. Credit: Jim Salter

The bottom floor of our test house is far more of a challenge—there are a good 30 feet or so of packed earth and concrete slab in the line of sight between this floor and the router. Credit: Jim Salter

Our test house is a particularly challenging one. The Internet service provider terminated their coax in a small closet off the entry hall. This is better than many homes or apartments where it's literally all the way in a corner, but it's still far from the center of the house. We've got 3,500 square feet total to cover, and the downstairs is especially difficult due to being a partial basement with 30+ feet of packed earth and a concrete slab in line-of-sight between most of that floor and the router.

Before we ran Ethernet cable and deployed Ubiquiti UAP-AC-Lite wired access points, there was effectively no working Wi-Fi on the downstairs floor of the house—but good mesh kits can deliver solid, reliable service there, as does the house Wi-Fi.

One thing we've learned about testing Wi-Fi is that unfortunately, a lot of the kits and routers we test aren't great. In the beginning, we'd sometimes waste hours or even days just trying to get some results, however bad. This is where the extra adapter on each Chromebook comes in handy—we have Ubiquiti access points all over the house, so we connect the Chromebook's built-in Intel 7265 adapter to the device under test, and the Linksys WUSB-6300 adapter to the house Wi-Fi for out-of-band command and control.

In order to avoid congestion, we leave all house Wi-Fi access points locked to 2.4GHz Channel 11 only. This ensures plenty of spectrum remains available for the router or mesh kit we're testing. We also tag out the house microwave to make sure nobody accidentally runs it while tests are ongoing. (We've observed 2.4GHz throughput dropping to well under a tenth of its normal rate while the microwave runs.) Although microwaves operate directly on the 2.4GHz spectrum, some can negatively affect 5GHz as well due to unwanted harmonics—particularly in microwaves operated under "low power" settings that really just turn the inverter on and off on a cycle.

Simple throughput tests

In our simplest test, we just gobble a bunch of data from one laptop at a time. This makes big numbers but doesn't tell you much about how well a system will perform. Credit: Jim Salter

The "all stations" test is more interesting: we try to download as much data as possible at each station, simultaneously. Credit: Jim Salter

Testing each station (Chromebook) one at a time, with an unthrottled 1MB netburn run, gives us a reasonable effective maximum download speed. Although this gets the largest number, it's not particularly interesting—we include it mostly because readers want to see the big numbers, but a big unthrottled download speed doesn't correlate strongly with good performance when the entire network is under load. This number can be useful for helping to explain particularly bad results at one location, though—for example, in the sample results above, we can see that Nest can't really service our downstairs bedroom at all.

In the second graph, we see the performance of all stations running a simultaneous unthrottled download. This is where things begin to get more interesting, as we uncover additional weaknesses in a system. Nest Wi-Fi is still failing miserably to service the downstairs bedroom—and Eero, which did just fine when testing each station individually, has developed an issue servicing the station in the kitchen.

Since Eero had no problems with that station in individual testing, but it's struggling mightily now, we can conclude that the dual-band Eero node in the living room is struggling to manage lots of simultaneous throughput from multiple stations. To learn more, we'll need to take a look at an excerpt from the raw data:

| hostname | ifchangewarning | freq | freq_after | ap | ap_after | throughput |
|---|---|---|---|---|---|---|
| upstairs_bedroom | WARNING! | 5.18GHz | 5.18GHz | livingroom_tv | livingroom_tv | 46.6Mbps |
| downstairs_bedroom | | 5.18GHz | 5.18GHz | downstairs_den | downstairs_den | 30.8Mbps |
| kitchen | WARNING! | 5.18GHz | 2.412GHz | livingroom_tv | livingroom_tv | 10.7Mbps |
| living_room | | 5.18GHz | 5.18GHz | livingroom_tv | livingroom_tv | 34.5Mbps |

We can see that both the upstairs bedroom and kitchen stations hopped frequency or access point during testing. The upstairs bedroom station returned to its original setting by the time testing was complete, but netburn's output warns us that not all frequency and associated AP results were the same for the individual returns during testing. We're not really worried about the upstairs bedroom, since its throughput was fine—but taking a look at the kitchen, we can see that it hopped from 5.18GHz to 2.412GHz.
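The `ifchangewarning` column flags stations whose frequency or associated AP was not the same across every sample taken during the run, even if it ended where it started. Here is a minimal sketch of that check. It is a hypothetical reimplementation, not netburn's Perl code. The intermediate samples for the upstairs bedroom are invented for illustration, because the raw excerpt only shows the first and last values.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Sample:
    freq: str
    ap: str

def ifchange_warning(samples):
    """'WARNING!' if any sample's frequency or AP differs from the first one."""
    first = samples[0]
    changed = any(s != first for s in samples[1:])
    return "WARNING!" if changed else ""

kitchen = [Sample("5.18GHz", "livingroom_tv"), Sample("2.412GHz", "livingroom_tv")]
living_room = [Sample("5.18GHz", "livingroom_tv")] * 3
# Hypothetical mid-run hop: starts and ends on the same AP but moved in between.
upstairs = [Sample("5.18GHz", "livingroom_tv"),
            Sample("5.18GHz", "downstairs_den"),
            Sample("5.18GHz", "livingroom_tv")]

for name, s in (("kitchen", kitchen), ("living_room", living_room), ("upstairs", upstairs)):
    print(name, ifchange_warning(s) or "-", "freq_after:", s[-1].freq)
```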

Eero is considerably more dynamic than most kits in terms of commanding willing stations to roam between frequencies and access points to try to smooth out the flow of data when the network is busy, and it did its best here—but when all four stations asked for as much data as Eero could throw at them, it got overwhelmed. Tri-band kits like Plume Superpods have an easier time here, since they've got a second 5GHz band to allocate between backhaul and fronthaul and don't need to rely on 2.4GHz as heavily (or, in many cases, at all).

Streaming torture tests

Delivering four 1080p video streams simultaneously is an easy job for Eero and any good mesh kit. Credit: Jim Salter

Delivering 4K video streams to four different places in a big house is a challenge all on its own—but Eero does a solid job of it. Credit: Jim Salter

The tests that should mean the most to someone looking to buy a router or mesh kit are our streaming torture tests. In these tests, we ask each station (Chromebook) to both request a simulated video stream and run a Web browsing workload at the same time. The streams put pressure on the network—the 5Mbps 1080p streams shouldn't amount to much at our scale, but delivering four 20Mbps 4K streams is a significant challenge all on its own. In the sample results above, we can see that Nest Wi-Fi struggles significantly to deliver a decent streaming experience—but the most important metric isn't whether the kit can deliver a few streams, it's whether the kit can do so without screwing things up for other users in the house at the same time.

This is where the rubber begins to hit the road. Plume and Eero offer good Web browsing latency while streaming 1080p to all four STAs. Nest... not so much. Credit: Jim Salter

Trying to browse webpages while four different 4K streams are going is serious torture—for the most part, Eero does a good job of it. Credit: Jim Salter

Sure enough, this is where the biggest differences between our three test kits leap to the foreground. Nest Wi-Fi fails browsing latency tests pretty badly at multiple stations even with 1080p streams. Eero does fine at 1080p, but struggles at 4K—while the considerably more expensive Plume sails past every test smelling like a rose, due in part to its tri-band Superpods having an entire extra radio per access point to carry the load.

Conclusions

If you want to test network gear Ars-style at home, it's not that difficult to do so. The best experience to be had is under Linux, since interface connection information won't work as written under macOS or Windows Subsystem for Linux. But the actual performance testing itself works quite well under either Linux or Windows Subsystem for Linux, and it should work under macOS as well.

You probably don't need to have absolutely everything we do around the Orbital HQ to do an adequate job of feeling out your hardware. The extra USB Wi-Fi adapter and separate "house Wi-Fi" for command and control are nice to have, but you can still test without them. If you've got multiple laptops, net-hydra will use SSH and the whenits tool (also in the network-testing repository) to schedule simultaneous tests to start after the SSH sessions themselves conclude, so they won't affect your test results.
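Here is the general idea behind scheduling simultaneous runs. A controller picks a start time a few seconds in the future and hands it to each laptop. Each laptop then sleeps until that moment, so the SSH traffic used to set things up is finished before any measurement begins. This is a hypothetical sketch of the concept only: it does not reproduce net-hydra's or whenits's actual interface, and it assumes the laptops' clocks are synchronized (for example, via NTP).

```python
import time
from datetime import datetime, timedelta, timezone

def pick_start(delay_s=10, now=None):
    """Controller side: choose a shared start time delay_s seconds from now."""
    if now is None:
        now = datetime.now(timezone.utc)
    return (now + timedelta(seconds=delay_s)).replace(microsecond=0)

def wait_until(start):
    """Worker side: sleep until the shared start time (no-op if it has passed)."""
    remaining = (start - datetime.now(timezone.utc)).total_seconds()
    if remaining > 0:
        time.sleep(remaining)

start = pick_start(delay_s=2)
print("all stations start at", start.isoformat())
wait_until(start)  # each laptop would then launch its test workload here
```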

And finally, if you don't just happen to have giant stacks of laptops lying around the house... you may be able to make do with less. It's less scientific and reproducible, but there's nothing stopping you from running an actual 4K or 1080p stream on a console, tablet, or what have you to generate pressure on the network while you do browsing tests with netburn on a laptop. Go nuts!

User-generated tests unfortunately won't ever really compare apples-to-apples with ours, since different environments produce different results. But this kind of testing can be deeply useful for readers who want to learn more about optimal placement of access points in their own house and how to make sure the Wi-Fi isn't just great for one device—but for all of them, when everyone is home and busy.

"can" - Google News

January 08, 2020 at 08:00PM

https://ift.tt/2T4K2Ys

How Ars tests Wi-Fi gear (and you can, too) - Ars Technica

"can" - Google News

https://ift.tt/2NE2i6G

Shoes Man Tutorial

Pos News Update

Meme Update

Korean Entertainment News

Japan News Update

No comments:

Post a Comment